Filter-Based Reconstruction of Images from Events

Oct 22, 2025

Bernd Pfrommer

FIBAR

Abstract

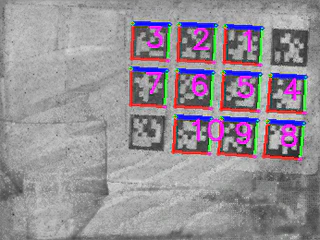

Reconstructing an intensity image from the events of a moving event camera is a challenging task that is typically approached with neural networks deployed on graphics processing units. This paper presents a much simpler, FIlter Based Asynchronous Reconstruction method (FIBAR). First, intensity changes signaled by events are integrated with a temporal digital IIR filter. To reduce reconstruction noise, stale pixels are detected by a novel algorithm that regulates a window of recently updated pixels. Arguing that for a moving camera, the absence of events at a pixel location likely implies a low image gradient, stale pixels are then blurred with a Gaussian filter. In contrast to most existing methods, FIBAR is asynchronous and permits image read-out at an arbitrary time. It runs on a modern laptop CPU at about 42 million events/s with spatial filtering enabled and about 140 million events/s without it. A few simple qualitative experiments are presented that show the difference in image reconstruction between FIBAR and a neural network-based approach (FireNet). FIBAR's reconstruction is noisier than that of neural network-based methods and suffers from ghost images. However, it is sufficient for certain tasks such as the detection of fiducial markers. Code is available at https://github.com/ros-event-camera/event_image_reconstruction_fibar.
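To make the three stages named in the abstract more concrete, the following toy sketch integrates events per pixel, marks pixels as stale, and blurs only the stale ones at read-out. It is not the FIBAR implementation: the class name `EventIntegratorSketch`, the first-order leaky integrator, the fixed-size FIFO used as the "window of recently updated pixels", the 3x3 kernel, and all parameter values are assumptions chosen for illustration. The paper's actual filter and its window regulation are described only at a high level here and may differ substantially.

```python
from collections import deque


class EventIntegratorSketch:
    """Toy event-to-image integrator. Hypothetical, NOT the FIBAR code."""

    def __init__(self, width, height, decay=0.95, contrast=0.1, window=4):
        self.width, self.height = width, height
        self.decay = decay        # assumed pole of a first-order IIR filter
        self.contrast = contrast  # assumed intensity step per event
        self.window = window      # assumed fixed size of the recent-pixel FIFO
        self.state = [[0.0] * width for _ in range(height)]
        self.recent = deque()     # FIFO of recently updated pixel coordinates
        self.count = {}           # pixel -> number of its entries in the FIFO

    def update(self, x, y, polarity):
        """Integrate one event: y[n] = decay * y[n-1] +/- contrast."""
        step = self.contrast if polarity else -self.contrast
        self.state[y][x] = self.decay * self.state[y][x] + step
        self.recent.append((x, y))
        self.count[(x, y)] = self.count.get((x, y), 0) + 1
        if len(self.recent) > self.window:
            old = self.recent.popleft()
            self.count[old] -= 1
            if self.count[old] == 0:
                del self.count[old]

    def is_stale(self, x, y):
        """A pixel is stale if it no longer appears in the recent window."""
        return (x, y) not in self.count

    def read_image(self):
        """Read out at any time; stale pixels get a 3x3 Gaussian-like blur."""
        kernel = ((1, 2, 1), (2, 4, 2), (1, 2, 1))
        out = [row[:] for row in self.state]
        for y in range(self.height):
            for x in range(self.width):
                if not self.is_stale(x, y):
                    continue  # recently updated pixels are left untouched
                acc, weight = 0.0, 0
                for dy in (-1, 0, 1):
                    for dx in (-1, 0, 1):
                        yy, xx = y + dy, x + dx
                        if 0 <= yy < self.height and 0 <= xx < self.width:
                            k = kernel[dy + 1][dx + 1]
                            acc += k * self.state[yy][xx]
                            weight += k
                out[y][x] = acc / weight
        return out


# Usage: feed events one at a time, then read the image whenever needed.
f = EventIntegratorSketch(3, 3, decay=0.5, contrast=1.0, window=2)
f.update(1, 1, True)
f.update(1, 1, True)
img = f.read_image()
```

Because the state is updated per event and the blur is applied only at read-out, an image can be requested at an arbitrary time, which mirrors the asynchronous property claimed in the abstract. The fixed-size FIFO here is only a stand-in for the stale-pixel detection described above.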

Type

Publication

arXiv:2510.20071